“The three most important numbers in a person’s life are your social security number, your phone number, and your FICO score,” said Teddy Flo, Zest AI’s Chief Legal Officer, during a panel discussion at Fintech Nexus USA 2022.

“Half of all white Americans have a FICO score above 700, while one in five black people have a FICO score above 700. That is not a reflection of credit risk; that is a reflection of historical racism. And there’s no other way to get around it.”

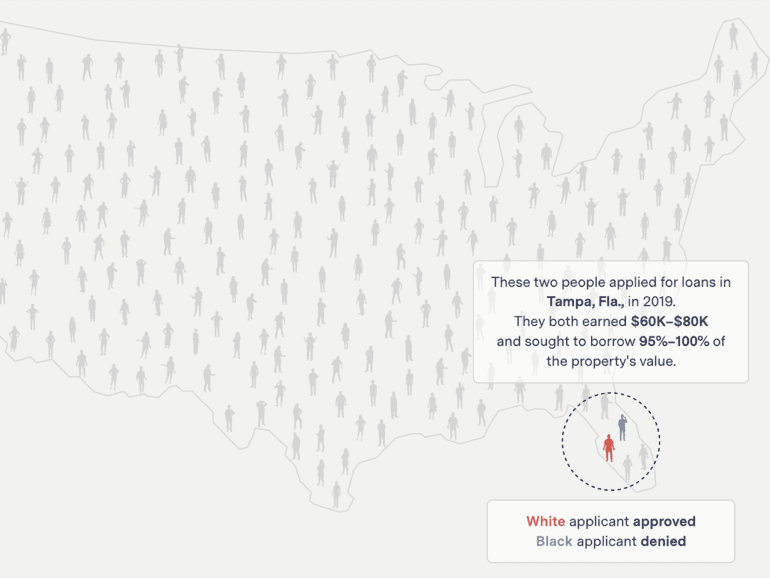

Bias in credit underwriting is real. Although the sector has turned to algorithms and AI to standardize the process, bias persists. Because these systems rely on data points saturated with historical discrimination based on race and gender, the problem risks being exacerbated unless specific measures are taken to counteract it.

According to The Markup, loan applicants of color nationally were 40% to 80% more likely to be denied than their white counterparts; in certain areas, the disparity was greater than 250%.

To counteract this, multiple organizations are working to improve conditions, and developments in AI and machine learning (ML) aim to close the gap in credit decisions.

“It’s just a matter of using better math and more data. This enables our customers to increase approvals between 15% and 30%, with no increase in risk, or decrease losses by between 30% and 50%, with no decrease in approval rate,” continued Flo.

Increased engagement of regulators will drive development forward

Regulators have, for some time, been wary of AI. Concerned by the lack of transparency and accountability, they have only recently begun attempting to regulate the space at a pace that matches its development.

“With innovation comes the regulatory concern,” said Rebecca Kuehn, Partner at Hudson Cook. “There’s a lot of focus on algorithmic discrimination. This is the new ‘boogeyman’ word. We’re concerned about what AI is doing, and we’re concerned about ML.”

Focusing on AI innovation and how it could best serve communities, Kuehn believes regulatory engagement will be essential going forward.

“We’re operating under statutes so many years old, using technologies that weren’t contemplated at the time. There’s a real lag there. Some people are reticent to try this because you’re affirmatively using characteristics you would normally want to be completely blind to engage in lending.”

Although statutes such as the Equal Credit Opportunity Act have long been in place, reworking the measures those statutes established may be vital to addressing historical data bias.

The role of adversarial debiasing

Traditional underwriting has relied mainly on algorithms that assess applicants’ potential risk and assign scores, such as FICO scores, based on the outcome.

Flo explained that adversarial debiasing works to counteract the effects of data bias. The approach Zest implements within current regulation involves a secondary algorithmic model, with access to race and gender data, that runs alongside the primary model.

“You have the underwriting model, start scoring applicants using the training data,” he explained. “And then it sends the scores to the second model with access to race data. The second model is looking for a correlation between the risk score you assign a borrower and their race or gender.”

The two models pass data back and forth, attempting to create a neutral environment that future models can use for credit decisioning.
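The check Flo attributes to the second model, looking for a correlation between risk scores and a protected attribute, can be illustrated with a minimal sketch. This is not Zest's implementation: a plain Pearson correlation stands in for a trained adversary, and the function names, the made-up data, and the 0.1 threshold are all assumptions chosen for illustration.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant sequence carries no signal to correlate
    return cov / sqrt(var_x * var_y)

def flags_bias(scores, group, threshold=0.1):
    """Hypothetical check: True when scores track group membership.

    `group` encodes a protected attribute as 0/1. The threshold is an
    illustrative assumption, not a regulatory or industry value.
    """
    return abs(pearson(scores, group)) > threshold

# Made-up example data: four applicants, two per group.
group = [0, 0, 1, 1]
scores_tracking_group = [0.2, 0.3, 0.7, 0.8]   # scores rise with group
scores_independent = [0.2, 0.8, 0.3, 0.7]      # scores unrelated to group

print(flags_bias(scores_tracking_group, group))
print(flags_bias(scores_independent, group))
```

In a full adversarial setup, a signal like this would be fed back to the underwriting model during training so that it learns scores the second model cannot link to race or gender; the sketch covers only the detection step.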

Alternative data makes a powerful difference

Flo also explained that ML is essential to unlocking access to alternative data.

“Algorithmic bias is very real. There’s no getting around it,” said Flo. “Machine learning, rather than being the ‘boogeyman’, the thing that’s going to make it worse, can make it better. Because it’s machine learning that unlocks the power of alternative data.”

“You need an algorithm that can consider alternative data when it’s available and consider traditional data when that’s the only data available and still lead to good lending outcomes.”

Alternative data comprises data points that aren’t directly tied to the credit decision at hand but can help build a broader picture of the applicant.
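Flo's point about an algorithm that uses alternative data when it is available and falls back to traditional data otherwise can be sketched roughly as follows. Everything here is hypothetical: the `Applicant` fields, the use of rent payments as the alternative signal, and the weights are illustrative assumptions, not a real underwriting model.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Applicant:
    # Traditional data: assumed always present in this sketch.
    on_time_payment_rate: float   # share of credit payments made on time, 0.0 to 1.0
    credit_utilization: float     # share of available credit in use, 0.0 to 1.0
    # Alternative data: None when no rental history is available.
    on_time_rent_rate: Optional[float] = None

def risk_score(a: Applicant) -> float:
    """Return a toy score in [0, 1]; higher means lower estimated risk.

    The weights below are made up for illustration only.
    """
    traditional = 0.7 * a.on_time_payment_rate + 0.3 * (1.0 - a.credit_utilization)
    if a.on_time_rent_rate is None:
        # Only traditional data is available: score on that alone.
        return traditional
    # Alternative data is available: blend it into the score.
    return 0.6 * traditional + 0.4 * a.on_time_rent_rate

thin_file = Applicant(on_time_payment_rate=0.5, credit_utilization=0.5)
with_rent = Applicant(on_time_payment_rate=0.5, credit_utilization=0.5,
                      on_time_rent_rate=1.0)

print(risk_score(thin_file))
print(risk_score(with_rent))
```

The two applicants have identical traditional data; only the second has a rental history, and a strong one raises the score. A production model would learn such relationships from data rather than use fixed weights.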

Compared to older methods of algorithmic underwriting, which could only assess 25 data points, machine learning could open up the process to 1,000. “If I’m trying to underwrite a loan for your listeners, and I have 25 data points, I’m going to have one picture; if I have 1000, I’m gonna get a much more accurate picture of credit risk,” he continued.

“I think there’s also a moment where we have to start thinking creatively in terms of other data points that could be used,” said Ulysses Smith, Head of Global Impact, Equity, and Belonging at Blend. “We’ve seen other things emerge over the last year or so, like rental payments being included. But there are so many other things we could think of if we all sat down collectively and just said, this will probably help paint a better picture of a borrower than we currently have.”

AI and Machine Learning are the future

Although much work and engagement are needed to create an environment that moves away from past inequality, many are hopeful for the future.

“These problems are structural,” said Smith. “We didn’t create them overnight; they took decades to come to life. And it’s going to take some time to make sure we address them.”

“We must still address some of the root causes of inequity while building these models. The difficult piece is working with regulators to move at the same pace. But we should position this so that AI is not considered the boogeyman moving forward.”

“We know it can be used as a tool; it can be manipulated negatively if people choose to do that. But with the right checks and with people in place, who are interested in making this better for everyone else, we can make this happen. We can build this better future.”