Once confined to science fiction, the widespread application of artificial intelligence (AI) is fast becoming a reality.

Increasingly, AI and machine learning are being applied to financial tools, with many suggesting that the technology could be key to building a more inclusive, accessible financial system.

Media outlets and experts herald its ability to speed up application processing and mitigate risk. In a single month in late 2022, the world saw the release of three groundbreaking advances (ChatGPT, CICERO, and Stable Diffusion), sending investment in the sector into a giddy frenzy.

As in many sectors, regulation is falling behind innovation. While the gap is perhaps less well publicized than in the crypto space, it could have equally devastating consequences.

In the latest Oxford Insights report on Government Readiness for AI, CEO Richard Stirling stated, “We need governments to roll out responsive regulatory regimes rapidly. The hugely significant advances in AI increase the risk of the technologies being harnessed by bad actors or creating services that society is not ready to deal with.”

Unsettling conversations with generative AI that echo every science-fiction nightmare may be the least of our worries. So is the world really ready?

The elephant in the room

No report on the development of AI is now complete without some discussion of ChatGPT.

The tool has taken the world by storm, with stories emerging that could disturb even those with little interest in science fiction.

On a more practical level, users worldwide are putting the tool to work. Students are asking it to write essays, lovers are requesting Valentine's poems, and mathematicians are asking for the answers to complicated equations. In principle, the more data users input, the more material the tool has to learn from and improve.

In finance, the debate centers on potential applications. Financial advisory assistants and customer service chatbots are the most obvious use cases, with the potential to streamline decision-making and processes.

Meanwhile, finance giants such as JPMorgan Chase have already restricted employee access to the tool due to "compliance concerns."

On the whole, however, financial leaders' excitement about the technology is muted. "Our teams are looking at ChatGPT. We've done a lot of experimentation with it," Ken Meyer, chief information and experience officer at Truist, told American Banker.

“If you need to write a term paper right now, it’s pretty phenomenal. But you’re talking about a language-based platform that basically makes up stuff based on everything feeding it. So in a highly regulated industry, I don’t suspect that ChatGPT will be answering questions for clients anytime soon.”

Global readiness highest in the U.S. and Singapore

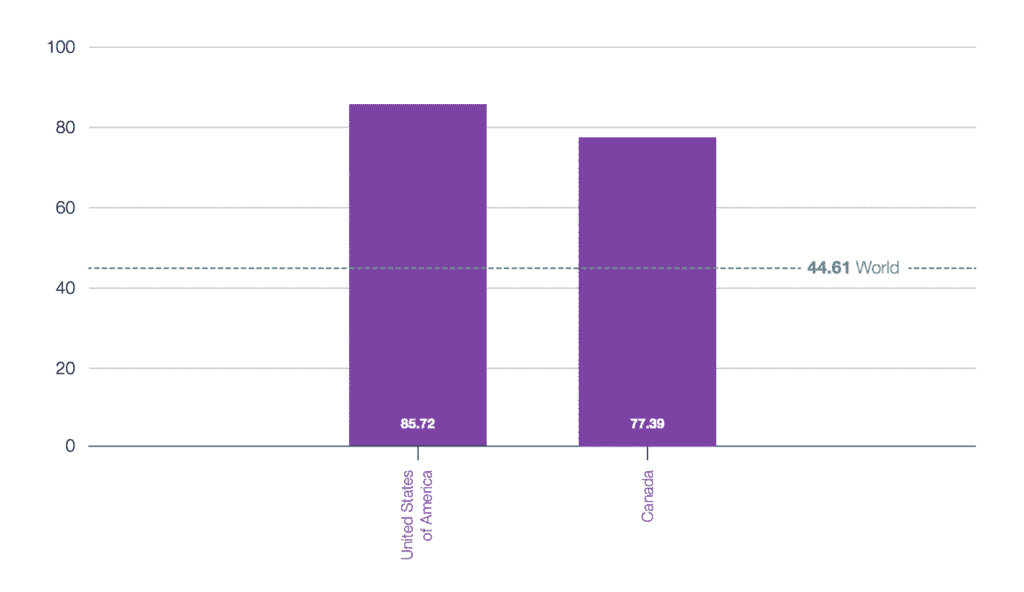

The Oxford Insights report assessed AI readiness across three pillars: government, technology, and data and infrastructure.

The U.S. and Singapore vied for first place in the report, followed in third by the UK.

Singapore scored strongly on the government and data pillars, excelling in particular because of its government's willingness and capability to solve problems with AI. The country has embraced the technology, with the government releasing its National AI Strategy in 2019.

Singapore's biggest weakness, however, was its ranking for governance and ethics, which Oxford Insights said may indicate a need for more legislative controls to protect citizens from harm.

The U.S. advantage lies in the country's innovation capacity and the maturity of its technology sector.

Merve Hickok, Research Director at the Center for AI and Digital Policy and Oxford Insights' regional expert for North America, noted that U.S. investment in research and development had played a critical role in strengthening the nation's position. The U.S. leads the world in the number of AI unicorns.

Also working in the U.S.'s favor is an apparent cross-party consensus, which the study found could sustain development and adoption despite changes in the political landscape. The Blueprint for an AI Bill of Rights was published in late 2022, alongside the passage of the AI Training Act, paving the way for regulation in the space.

Hickok was, however, concerned about the potential for "anti-competitive behavior," in which large companies with more computing power and access to larger data sets could monopolize the market. She said regulators are targeting this area but cautioned that even strict rules are unlikely to affect certain fundamental areas.

Explainability and Bias

Perhaps the area most likely to affect the average person is AI's explainability and bias, a risk that many of the technology's implementers are already aware of.

Bias is a concern because AI can "bake in" biases already prevalent in the financial system, a problem that is particularly acute in access to credit.
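
One common way to put a number on this kind of bias is the adverse impact ratio: the approval rate of one group divided by that of a reference group. Values below 0.8 are often treated as a warning sign, a rule of thumb (the "four-fifths rule") borrowed from U.S. employment guidance. The sketch below is illustrative only: the group labels "A" and "B" and the decisions are made up, and it shows the metric itself rather than any particular lender's or regulator's method.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved applications per group.

    `decisions` is a list of (group, approved) pairs, where `approved` is a bool.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def adverse_impact_ratio(decisions, protected, reference):
    """Approval rate of the protected group divided by that of the reference group."""
    rates = approval_rates(decisions)
    return rates[protected] / rates[reference]


# Hypothetical data: group "A" approved 8 of 10 times, group "B" 5 of 10 times.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

ratio = adverse_impact_ratio(decisions, protected="B", reference="A")
print(f"{ratio:.3f}")                   # 0.625
print("flag" if ratio < 0.8 else "ok")  # flag
```

A ratio well below 0.8, as here, does not prove discrimination on its own, but it is the kind of signal that prompts a closer look at the data and the model.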

Although many nations have set out AI strategies, AI-specific legislation is still lacking. Despite ranking highly in the AI Readiness Index, North America only began to approach AI from a regulatory standpoint last year, releasing a few use-case-specific rules. Many of these focused on the potential bias of AI systems, but the financial system has yet to be explicitly targeted.

The U.S. plans to implement broader rules covering all consumer-facing AI in 2023, but Europe is poised to be the first jurisdiction to do so. The EU's AI Act, proposed in 2021 and projected to pass into law in early 2023, categorizes AI applications according to the risk of harm they could pose to citizens.

Depending on how an AI activity is categorized, different regulatory constraints, checks, and bans will apply to mitigate the risk. Although the EU considers most AI applications in use in the bloc to be low risk, Thierry Breton, the European Commissioner for the Internal Market, told Reuters that "transparency is important about the risk of bias and false information."
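
The tiered approach can be pictured as a simple lookup from use case to risk tier to obligations. The sketch below is a simplified illustration based on the four tiers in the European Commission's 2021 proposal (unacceptable, high, limited, and minimal risk). The example use cases and the one-line summaries of obligations are assumptions chosen for illustration, not a legal classification; the final text of the Act governs.

```python
from enum import Enum


class RiskTier(Enum):
    """Simplified risk tiers from the 2021 AI Act proposal (summaries are illustrative)."""
    UNACCEPTABLE = "prohibited"
    HIGH = "conformity assessment, risk management, human oversight"
    LIMITED = "transparency obligations"
    MINIMAL = "no new obligations"


# Illustrative mapping only; real classification depends on the legal text.
EXAMPLE_USES = {
    "social scoring by public authorities": RiskTier.UNACCEPTABLE,
    "credit scoring of individuals": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations(use_case: str) -> str:
    """Return the tier name and its simplified obligations for an example use case."""
    tier = EXAMPLE_USES[use_case]
    return f"{tier.name}: {tier.value}"


for use in EXAMPLE_USES:
    print(f"{use} -> {obligations(use)}")
```

The point for finance is that credit scoring falls in the high-risk tier, which carries far heavier obligations than a general-purpose chatbot.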

AI is sometimes described as a "black box": a lack of transparency about how a system reaches its decisions makes the outcomes difficult to explain, which many find concerning.

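In the U.S., the use of AI in credit decisions is governed by federal laws that, although not specific to AI, make the explainability of decisions essential. The Equal Credit Opportunity Act, for example, requires lenders to give applicants specific reasons when they are denied credit.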
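
One reason simpler models are easier to explain is that each feature's contribution to a decision can be read off directly, which is how "reason codes" for adverse action notices are often produced. The sketch below assumes a hypothetical logistic scoring model: the feature names, weights, intercept, and baseline applicant are all made up for illustration. It ranks the features that pulled an applicant's score down the most relative to the baseline; real lenders' models and reason-code methods vary.

```python
import math

# Hypothetical model: weights, intercept, and baseline are made up for illustration.
WEIGHTS = {
    "credit_utilization": -3.0,    # higher utilization lowers the score
    "months_of_history": 0.02,     # longer credit history raises it
    "recent_delinquencies": -1.5,  # each recent delinquency lowers it
}
INTERCEPT = 1.0
# Reference applicant that each feature is compared against.
BASELINE = {"credit_utilization": 0.3, "months_of_history": 60, "recent_delinquencies": 0}


def approval_probability(applicant):
    """Logistic model: sigmoid of the intercept plus a weighted sum of features."""
    z = INTERCEPT + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def reason_codes(applicant, top_n=2):
    """Return the features that lowered the score most, relative to the baseline."""
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    negatives = [(f, c) for f, c in contributions.items() if c < 0]
    negatives.sort(key=lambda fc: fc[1])  # most negative contribution first
    return [f for f, _ in negatives[:top_n]]


applicant = {"credit_utilization": 0.9, "months_of_history": 24, "recent_delinquencies": 2}
print(round(approval_probability(applicant), 3))
print(reason_codes(applicant))  # ['recent_delinquencies', 'credit_utilization']
```

With more complex machine learning models, the same per-feature attributions are not available directly and have to be estimated, which is where the "black box" concern comes in.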

Experts say an AI system is only as biased as the data that feeds it. For many, the rise of open finance and the alternative data it makes available could help mitigate the strong influence of historical bias.

As AI incorporates more powerful machine learning techniques, experts are optimistic that this bias can be corrected.

“It’s machine learning that unlocks the power of alternative data,” said Teddy Flo, Chief Legal Officer at Zest AI. “Machine learning can take in more data points…so that you can get a more holistic picture of the people you’re underwriting.”