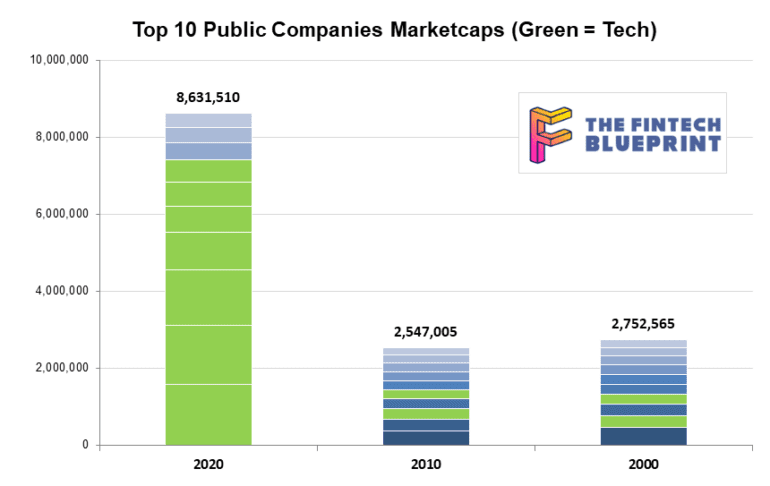

This week, we look at a breakthrough artificial intelligence release from OpenAI called GPT-3. It is powered by a machine learning architecture called the Transformer, has 175 billion parameters, and has been trained on 8 years of web-crawled text data. GPT-3 likes to do arithmetic, solve SAT analogy questions, write Harry Potter fan fiction, and write CSS code and SQL queries. We anchor the analysis of these developments in the changing $8 trillion landscape of our public companies and the tech cold war with China.
