With the explosion of Generative AI, Sam Altman has become one of the most famous entrepreneurs on the planet. But not much has been written about his fintech investments.

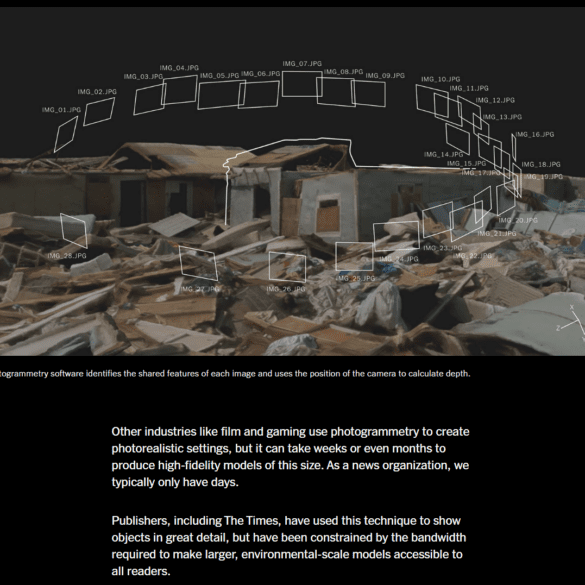

Within a decade, the form factor for computing will radically change from staring at screens with flat imagery to participating in embedded virtual worlds with fully navigable, hyper-realistic environments. Those environments will be filled with software agents, some hybrid human and others entirely AI, that 90% of the population will be unable to tell apart from real people.

Instead, we are going to tap again into a new development in Art and Neural Networks as a metaphor for where AI progress sits today, and what is feasible in the years to come. For our 2019 “initiation” on this topic with foundational concepts, see here. Today, let’s talk about OpenAI’s CLIP model, which connects natural language inputs with image search and navigation, and about generative neural art models like VQ-GAN.

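Compared to GPT-3, which is really good at generating language, CLIP is really good at associating language with images. It matches an image to the closest natural-language description, so it can sort images into categories it was never explicitly trained on, rather than needing a labeled image data set for each new task.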
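To make the idea of matching images to language a bit more concrete, here is a minimal sketch in Python. It is not OpenAI's code and does not call the real CLIP model. The three-number "embeddings" are made up for illustration, and the `cosine` and `zero_shot_label` helpers are hypothetical names. The only assumption borrowed from CLIP is the general shape of the approach: images and captions live in the same vector space, and the caption whose vector points in the most similar direction wins.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_label(image_vec, label_vecs, temperature=0.1):
    """Pick the caption whose embedding is closest to the image embedding.

    Returns the best caption and a softmax distribution over all captions.
    The temperature value is an arbitrary choice for this sketch.
    """
    sims = {label: cosine(image_vec, vec) for label, vec in label_vecs.items()}
    top = max(sims.values())  # subtract the max for numerical stability
    exps = {label: math.exp((s - top) / temperature) for label, s in sims.items()}
    total = sum(exps.values())
    probs = {label: e / total for label, e in exps.items()}
    best = max(probs, key=probs.get)
    return best, probs


# Made-up embeddings: a real model would produce these from pixels and text.
image_vec = [0.9, 0.1, 0.2]
label_vecs = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a cat": [0.1, 0.9, 0.3],
    "a painting of a city": [0.2, 0.3, 0.9],
}

best, probs = zero_shot_label(image_vec, label_vecs)
print(best)
```

Adding a new category in this setup means adding a new caption, not retraining a classifier, which is why this style of model is useful for search and navigation.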

OpenAI, backed with $1B+ by Elon Musk & MSFT, can now program SQL and write Harry Potter fan-fiction

This week, we look at a breakthrough artificial intelligence release from OpenAI, called GPT-3. It is powered by a machine learning architecture called a Transformer, has 175 billion parameters, and has been trained on 8 years of web-crawled text data. GPT-3 likes to do arithmetic, solve SAT analogy questions, write Harry Potter fan fiction, and code CSS and SQL queries. We anchor the analysis of these developments in the changing $8 trillion landscape of our public companies, and the tech cold war with China.